Hierarchical Deep Feature Learning for Decoding Imagined Speech from EEG

Pramit Saha, Sidney Fels

Abstract

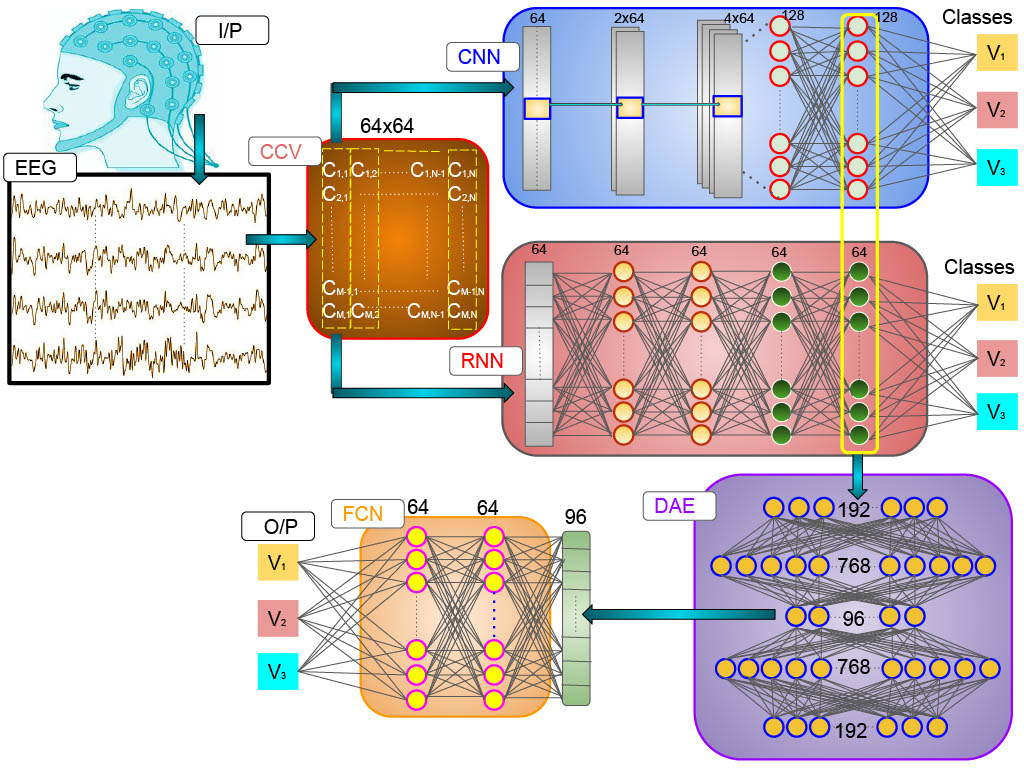

We propose a mixed deep neural network strategy that incorporates a parallel combination of Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN), cascaded with deep autoencoders and fully connected layers, for automatic identification of imagined speech from EEG. Instead of using raw EEG channel data, we compute the joint variability of the channels in the form of a covariance matrix that provides a spatio-temporal representation of the EEG. The networks are trained hierarchically, and the features extracted at each level are passed on to the next level of the hierarchy until the final classification. Using a publicly available EEG-based speech imagery database, we demonstrate an improvement in accuracy of around 23.45% over the baseline method. Our approach demonstrates the promise of a mixed DNN approach for complex spatio-temporal classification problems.
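As an illustration of the channel covariance representation mentioned above, the following is a minimal sketch rather than the authors' implementation. It assumes a single EEG trial stored as a NumPy array of shape (channels, samples) and uses the unbiased sample covariance; the function name `channel_covariance`, the choice of 8 channels and 500 samples, and the normalization are assumptions made here for illustration only.

```python
import numpy as np


def channel_covariance(eeg):
    """Return the channel-by-channel covariance of one EEG trial.

    eeg: array of shape (n_channels, n_samples) (assumed layout).
    The result has shape (n_channels, n_channels).
    """
    eeg = np.asarray(eeg, dtype=float)
    # Remove each channel's mean over time before measuring joint variability.
    centered = eeg - eeg.mean(axis=1, keepdims=True)
    # Unbiased estimate: divide by (n_samples - 1).
    return centered @ centered.T / (eeg.shape[1] - 1)


# Synthetic stand-in for a trial (hypothetical sizes, random data).
rng = np.random.default_rng(0)
trial = rng.standard_normal((8, 500))
cov = channel_covariance(trial)
```

The resulting matrix is symmetric, with one row and column per channel, so its size depends only on the number of channels and not on the trial length. This is one reason such a representation is convenient as a fixed-size network input.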
